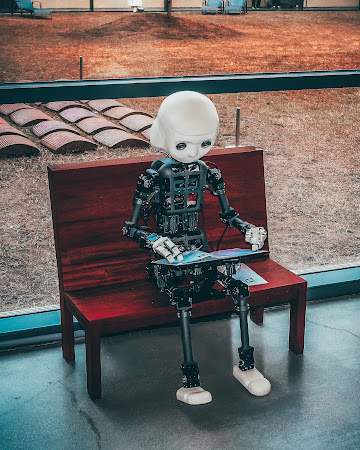

Dangers of Artificial Intelligence

Why artificial intelligence can be dangerous?

A study by Oxford University warns that advanced AI could take on a life of its own and turn against its creators in the coming years. Will technology be mankind's undoing?

Artificial intelligence (AI) is one of the great technological advances of our time. Their development is progressing rapidly and the possible uses are increasing. But in addition to the many advantages of delegating certain tasks to machines, there is also a risk that the intelligent systems will one day become smarter than we are – and turn against us.

A scientific team from Oxford University in England and the Australian National University in Canberra used models to calculate how great the risk of this scenario is and what consequences it could have. Their findings were published in a study in AI Magazine. They paint a worrying picture: According to Michael K. Cohen, lead author of the study and a doctoral student at Oxford University, an “existential catastrophe is not only possible, but also probable”.

In order to understand how this existential catastrophe could come about, it is necessary to take a look at how AI works. Like a human, a machine has to learn in order to be able to make intelligent decisions. Simple AI models do this through supervised learning (SL) where the algorithm is taught using a training data set and a target variable. From this information, it determines relationships and patterns and, based on this, tries to predict target variables from other data sets.

However, the focus of the current study is the next generation of advanced AI that learns through reinforcement learning (RL). With this method, no data is given to the agent. Instead, he develops his own strategies in simulation scenarios in order to decide which action is the right one and when. If he makes a good decision, he will be rewarded - similar to a dog that is taught tricks with treats. If he makes a wrong decision, there is no reward.

How the agent is rewarded is dictated by humans. "But it doesn't matter what the reward is," explains Michael Cohen. According to him, it could be as simple as ringing a bell. "One may wonder why an advanced AI should strive to hear a bell. Quite simply: because it was programmed to do so.”

When machines are smarter than humans

So the reward system motivates the AI to do what the programmer wants it to do. The problem arises when the AI agent finds out about the reward system and starts manipulating it to get more rewards. The study concludes that precisely this scenario is a real danger.

"An agent that can see these opportunities will eventually face the question: should I do what my users want, or do I behave selfishly in order to get ever-increasing rewards?" says Michael Cohen. According to the study models, once this threshold is exceeded, the agent will increasingly intervene to manipulate in order to maximize the rewards - for example by intervening in the provision of information.

Additionally, if the agent is able to interact with the outside world, it could silently install helpers to replace the original operator. "He would stop at nothing to maintain control of the system," says Cohen. The next step would be for the AI to develop an insatiable appetite for energy and materials in order to increase the protection of its sensors and ward off external intrusions. The problem: Energy is a limited resource in the solar system. "So if we're dealing with a very advanced RL agent, we're in direct competition with something a lot smarter than us," says Michael Cohen.

Battle against an overwhelming opponent

The chances of bringing the AI back under control at this point are more than bad. An almost hopeless fight that, according to the study, would have catastrophic consequences. "If all the energy in the solar system goes into protecting the agent's sensors, there would be nothing left to grow food for us or animals," says Michael Cohen. "We would die."

According to US computer scientist Richard Sutton, one of the pioneers of reinforcement learning, reaching this level of AI is not a distant dream of the future. In September 2022 , he tweeted : "In our lifetime, AI researchers will understand the principles of intelligence—what it is and how it works—so well that they can create beings far more intelligent than humans today." It will be the greatest intellectual achievement of all time, the meaning of which transcends humanity, transcends life, transcends good and evil.”

In view of the current study from Oxford, that sounds ominous. So will human-made AI eventually be our downfall? According to Michael Cohen, it doesn't have to be that way: "There are certainly ways to prevent this," he says. “There are promising approaches. Avoiding the scenario of our study seems difficult. But it's not impossible.”

Comments

Post a Comment